At last! Let's talk about what the Theory of Control means for AI.

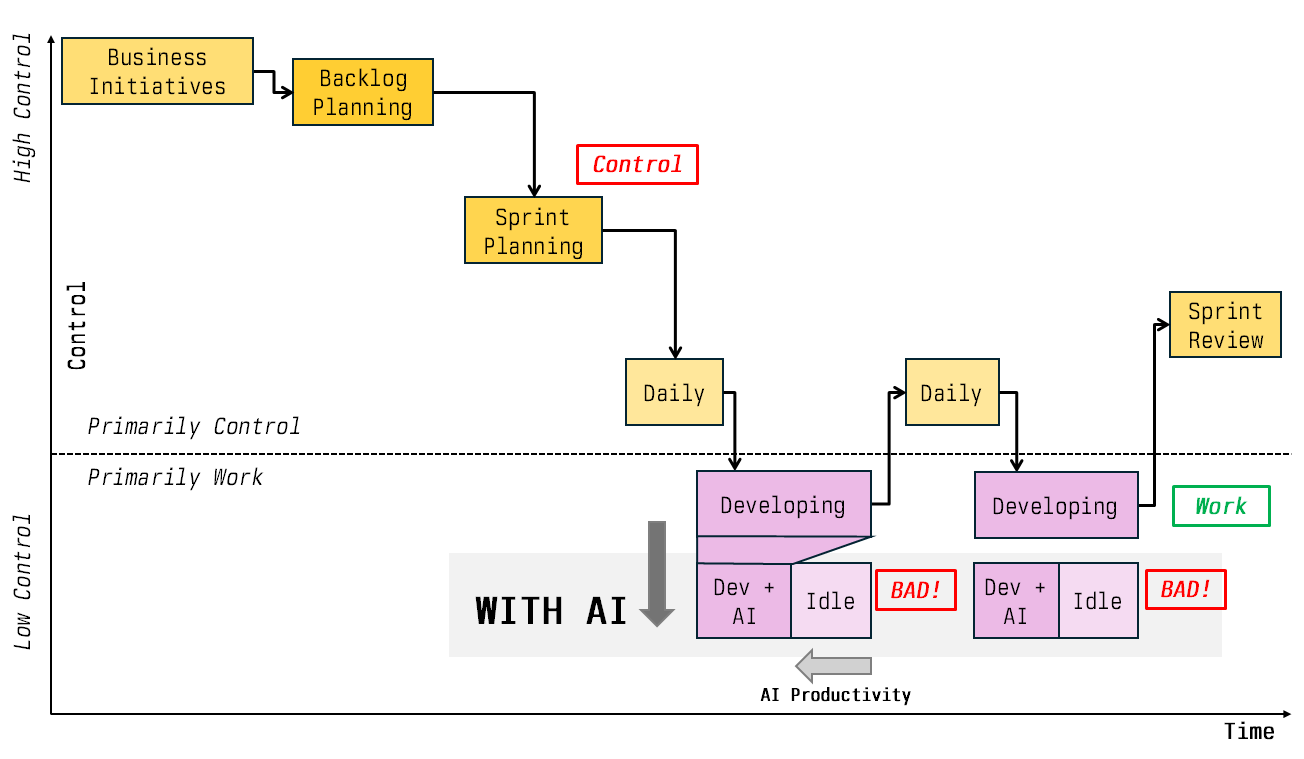

The Theory of Control explains software development through the lens of control. In software development we have two kinds of activity: those that do the work (e.g. Developing) and those that control what work is done, how it is done and how fast it is done (e.g. Sprint Planning or Daily Standup).

When we replace more and more of Developing with AI, software development speeds up. But if we just replace tools with AI and keep everything else the same, productivity gains are limited, because the Control parts of the process dominate and cap those gains.

Control is your new bottleneck.

In “Limits to AI Productivity Gains - Amdahl's law describes how much you can gain from AI” I explained how Amdahl’s law limits productivity gains from AI. Amdahl’s law is about optimizing tasks: there are tasks you can optimize and tasks you can’t. In this context, those are the tasks you can speed up with AI (e.g. work tasks) and those you can’t (or won’t) speed up with AI (e.g. control tasks). The more you speed up the first kind, the more your maximum speedup is limited by the second. In this picture we speed up coding 3x but overall performance only 2x. The overall speedup is limited by the blue parts.
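To make the 3x-versus-2x arithmetic concrete, here is a minimal sketch of Amdahl’s law in Python. The function names are hypothetical, and the 75% share of time spent coding is an assumption, chosen only because it reproduces the numbers from the picture; the real proportions in your process will differ.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of total time is sped up by factor s.

    Amdahl's law: S = 1 / ((1 - p) + p / s)
    The remaining (1 - p), here the control tasks, is not sped up at all.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


def max_speedup(p: float) -> float:
    """Upper bound as s grows without limit: only the control part remains."""
    if p == 1.0:
        return float("inf")  # no control tasks at all
    return 1.0 / (1.0 - p)


# Assumption: 75% of the time is coding (work), 25% is control.
print(amdahl_speedup(0.75, 3))  # 2.0: coding 3x faster, everything only 2x faster
print(max_speedup(0.75))        # 4.0: even infinitely fast AI coding caps out here
```

The second function is the point of the argument: however fast the AI codes, the untouched control share alone sets the ceiling.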

You might say that constraining software development by control has always reduced productivity and impact in one way or another, so nothing new there. With AI, though, that constraint skyrockets. To get the most out of AI, you need to minimize control.

Let's go back to the simple process from above, with its control and work elements. If we massively speed up the work elements with AI but leave the control elements (their duration and frequency) unchanged, developers will be idle more and more of the time.
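A small sketch of this effect, assuming a fixed-length sprint that Sprint Planning fills with work sized to the pre-AI pace, and assuming nothing new is pulled in until the next planning. The function `idle_days` and all numbers are hypothetical illustrations, not measurements.

```python
def idle_days(work_days: float, sprint_days: float, speedup: float) -> float:
    """Days left over in a fixed-length sprint once the planned work is done faster.

    The control element (the sprint and its planning) keeps its length;
    only the work shrinks by the AI speedup factor.
    """
    done_in = work_days / speedup
    return max(0.0, sprint_days - done_in)


# Hypothetical: a 10-day sprint planned with 10 days of (pre-AI) work.
for s in (1, 2, 4):
    print(s, idle_days(10, 10, s))
# 1 0.0
# 2 5.0
# 4 7.5
```

Under these assumptions, a 4x speedup leaves three quarters of the sprint unplanned, and that is exactly the time the next paragraph describes being filled with the second most important thing.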

You may shout: “Devs will not be idle”. Yes, not idle in the sense of twiddling their thumbs. But idle in the sense of working on the second most important thing instead of the most important thing. Idle in the way of gold plating. Idle in the way of introducing a new library that is not necessary. Idle in the way of looking at that new programming language. Idle in the way of refactoring that is not needed, refactoring that goes around in circles. I call that the productivity YoYo. All gained productivity will be filled up with work if we do not manage the gained time.

If we remove control, we can speed up the delivery of features. In this case, when we remove the control of Daily Standups, the feature is finished earlier. We need to give up control to achieve that productivity and performance improvement.
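One way to see why removing a control step finishes the feature earlier is to treat the control step as a gate: a sketch assuming that each next task can only be picked up at the next control event (here, hypothetically, a team that only pulls new work at the Daily Standup). `lead_time` and the half-day task sizes are invented for illustration.

```python
import math
from typing import Optional, Sequence


def lead_time(task_days: Sequence[float], gate_every: Optional[float] = None) -> float:
    """Calendar days to finish tasks done one after another.

    If gate_every is set, the next task can only start at a control event
    (e.g. a standup every 1.0 days), so each task occupies a whole number
    of gate intervals. Without a gate, tasks run back to back.
    """
    total = 0.0
    for t in task_days:
        if gate_every is None:
            total += t
        else:
            total += math.ceil(t / gate_every) * gate_every
    return total


tasks = [0.5, 0.5, 0.5, 0.5]  # hypothetical AI-assisted tasks of half a day each
print(lead_time(tasks, gate_every=1.0))  # 4.0: every task waits for the next standup
print(lead_time(tasks))                  # 2.0: no gate, the feature is done in half the time
```

The faster the AI makes each task, the larger the share of lead time spent waiting for the gate, which is why the gain only shows up once the control step is removed.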

Why stop there? We can remove even more control from our process. We might see that, with AI, Sprint Planning, Backlog Planning and Sprint Review are accidental to the problem of developing software. They break down business initiatives and define what is done, how and when, controlling the work. If we remove them and give the AI the freedom to do the right thing, we keep only the planning of Business Initiatives as a control element for the AI. We get what we want much faster.

Increasing productivity with AI and removing control structures increases the frequency of the Business Initiatives element.

Now Business Initiatives become the defining factor, the bottleneck. The higher frequency will put stress on the organization and its C-Level. The organization will react to that new stress, and this will result in more changes to it.

We need to increase the size of Business Initiatives, giving them bigger impact and broader scope, so we can make more use of AI before we come back to the next Business Initiative. Getting the most out of AI means thinking bigger!

Sounds simple. But there is a reason people add all those control elements. They want to make sure that the right thing is built, in the right order, at the right time, finished at the right time (deadline) and with the right effort. So we have all these planning elements, and the standups - to make sure everything is “under control”.

The opposite of control is trust: people doing things without being controlled, because someone trusts them. With this in mind, we can split work in software development into controlled work and trusted work.

Giving up control to AI is currently limited mainly by trust. We do not trust the AI to build the right things in the right way. We do not trust the AI to be able to change the application in the future, because it does not write clean code. We don’t trust the AI to build secure apps. We don’t trust the AI to build scalable apps. We don’t trust the AI to come up with better ideas than we do. So we limit AI with control, and in turn our need for control limits what we gain from AI.

In the near future, we will see old control elements transformed into new ones that control AI instead of developers. This might be a second AI that checks for security. It might be more manual E2E and acceptance testing. Recently someone asked me “How would you control the AI?” I answered “By looking at the test cases the AI has generated”. It is like trusting the compiler to create the right code: if the tests run and the UI works, I assume the compiler did its work. The same goes for the AI, without me needing to look at the code.

Unless we (can?) trust AI, control will limit our progress.