Everyone is talking about AI in coding. There is a lot of hand-waving and few facts. To remedy that, I started a journey to find out what AI can and can’t do, in small and simple steps, so that we can rely on them. The vehicle for this journey is Claude Code.

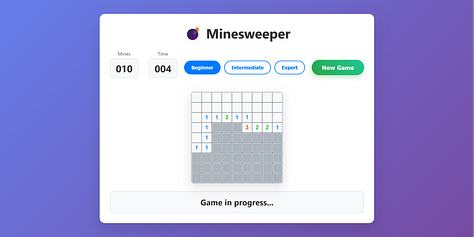

What could be a simple example? A small game, one that many people know: Minesweeper! Minesweeper is a simple game that became famous with older versions of Windows. The player needs to find all the hidden mines in a field. The game gives you hints about where a mine might be, but if you click on a mine, you lose.

So I let ChatGPT write a requirements document (requirements.txt) for Minesweeper and had Claude Code implement it. You can read about that experience here.

That worked wonderfully. For a few cents I got a playable web game of Minesweeper.

But we might wonder: was that a fluke? What happens if we change the requirements document and let Claude Code re-create the application? Do we get the same application? Do we get something different?

To find out, I ran Claude Code ten times on the same requirements, and here is what I got:

Ten totally different games (only nine are shown because of Substack). Each one looks different from the others, and they all play and feel different. Some feel more difficult, some feel easier. Some have obvious bugs and some don’t. Can you spot the bugs? Some, for example, don’t show flags to mark where you suspect a mine.

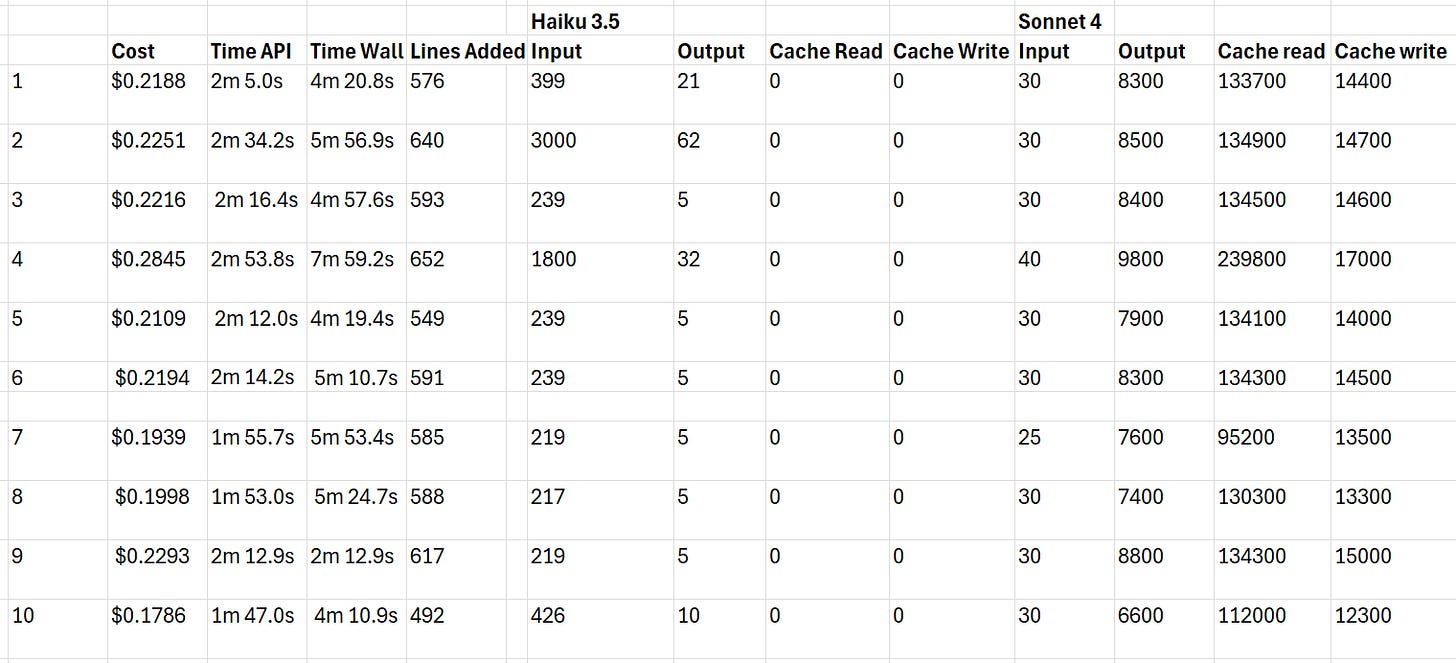

How did the generation of code differ between the different versions?

The generation times range from 1m 47s to 2m 53s (+62%).

The costs range from $0.18 to $0.28 (+55%).

Claude Code mostly used Sonnet 4. Output tokens range from 6,600 to 9,800 (+48%).

The lines of code (LOC) of the solutions range from 492 to 652 (+33%).
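Each percentage here is the gap between the smallest and the largest run, relative to the smallest. Below is a minimal sketch of that arithmetic, using only the rounded figures quoted above; the helper name `spreadPercent` is made up for this illustration. From the rounded dollar amounts the cost spread comes out closer to 56% than 55%, presumably because the original figure was computed from unrounded costs.

```javascript
// Relative spread between the smallest and the largest value, in percent.
// `spreadPercent` is a hypothetical helper, not part of any tool used here.
function spreadPercent(min, max) {
  return ((max - min) / min) * 100;
}

// Rounded figures as quoted in the text: [smallest, largest].
const runs = {
  "generation time (s)": [107, 173], // 1m 47s and 2m 53s
  "cost ($)": [0.18, 0.28],
  "output tokens": [6600, 9800],
  "lines of code": [492, 652],
};

for (const [metric, [min, max]] of Object.entries(runs)) {
  console.log(`${metric}: +${spreadPercent(min, max).toFixed(1)}%`);
}
```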

We see big differences in cost and in the size of the generated solution, and they do not correlate with the quality of the resulting game (see below).

Of course, I asked Claude Code to compare all 10 implementations (cost: $0.96). They differ wildly, and not only in appearance. To implement each game, Claude Code used:

Different game algorithms

Different internal cell/mine representation

Different features

Different visuals

This shows several things.

First, Claude Code does not, as would be expected, consistently create the same game, either visually or in gameplay. In the future, we’ll need to look at how to specify visuals with mock screenshots to force Claude Code into consistent visuals.

Second, it randomly adds features that it thinks should be there (touch support, for example), going beyond your requirements. If you read “Unique Implementation Highlights” below, you can see how random the features are.

Third, the code quality differs between implementations. You can’t expect the best code quality every time. In further experiments I will look at how much you need to put in your prompt, and how to structure it.

Overall, if we want to use Claude Code for one-shotting, that is, generating code from requirements, the technology is not there yet. Claude Code creates a different application each time it is run on the same requirements. While vibe coding (changing one code base by prompting) has problems with cooperation between developers and with the amount of code to review, the more promising one-shotting of applications struggles with consistency. In the future I will experiment to see how we can get more consistent results with Claude Code, and also compare it to the OpenAI Codex, Google Jules and Amazon Q coding agents. #StayTuned

Here is the result of Claude Code analyzing its own output, in more detail (with some mistakes, if you can spot them).

Minesweeper Implementations Comparison

Overview

This document compares 10 different Minesweeper game implementations (minesweeper-1 through minesweeper-10) analyzing their HTML structure, JavaScript logic, visual design, and functionality. Note that minesweeper-7 only contains HTML and images but no JavaScript implementation.

Visual Design Analysis

Design Patterns

Classic Windows Style (v1, v3, v7, v8, v9, v10)

Gray (#c0c0c0) backgrounds

Inset/outset borders for 3D effect

Black displays with red text

Traditional smiley face buttons

Modern Gradient Style (v2, v4, v5, v6)

Purple gradient backgrounds

White rounded containers

Colored buttons with modern styling

Clean typography

Visual Elements

Mine Counter Display: All use black background with red monospace font

Timer Display: Consistent across implementations

Grid Rendering: Canvas-based with consistent cell sizing

Flag Representation: Mix of 🚩 emoji and red triangular markers

Mine Representation: 💣 emoji or black circles

Layout Variations

Compact Layout (v1, v8, v9, v10): Minimal spacing, efficient use of space

Spacious Layout (v2, v4, v5, v6): Generous padding, modern card design

Traditional Layout (v3, v7): Classic Windows-style arrangement

Technical Implementation Comparison

Architecture Patterns

All implementations use:

ES6 class-based architecture

Single main game class

Canvas-based rendering

Event-driven interaction model

Code Organization Quality

Best Organized: v6, v8

Clear method separation

Consistent naming conventions

Well-structured event handling

Most Feature-Rich: v1, v9

Touch support

Advanced UI interactions

Comprehensive feedback systems

Cleanest Implementation: v5, v10

Minimal but complete functionality

Good code clarity

Efficient algorithms

Data Structure Approaches

Object-Based Cells (v1, v2, v4, v8, v10)

    {
      isMine: boolean,
      isRevealed: boolean,
      isFlagged: boolean,
      adjacentMines: number
    }

Separate Arrays (v3, v5, v9)

revealed[][]

flagged[][]

board[][] // with numeric values

Set-Based (v6)

Set objects for mines, revealed, flagged
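To make the three approaches concrete, here is a minimal sketch that stores the same tiny board in each representation and asks the same question of all three. It is an illustration only, not code from any of the ten implementations: the function names, the `-1` encoding for a mine, and the `"row,col"` string keys are all assumptions.

```javascript
// Hypothetical 3x3 board with a single mine at row 0, column 1.
const rows = 3, cols = 3;
const minePositions = [[0, 1]];

// 1. Object-based cells: one object per cell, all state in one place.
function makeObjectBoard() {
  const board = Array.from({ length: rows }, () =>
    Array.from({ length: cols }, () => ({
      isMine: false, isRevealed: false, isFlagged: false, adjacentMines: 0,
    }))
  );
  for (const [r, c] of minePositions) board[r][c].isMine = true;
  return board;
}

// 2. Separate arrays: parallel 2D arrays; board uses -1 for a mine (assumed encoding).
function makeArrayBoard() {
  const grid = (fill) => Array.from({ length: rows }, () => Array(cols).fill(fill));
  const state = { board: grid(0), revealed: grid(false), flagged: grid(false) };
  for (const [r, c] of minePositions) state.board[r][c] = -1;
  return state;
}

// 3. Set-based: only cells that have a property are stored, keyed as "row,col".
function makeSetBoard() {
  return {
    mines: new Set(minePositions.map(([r, c]) => `${r},${c}`)),
    revealed: new Set(),
    flagged: new Set(),
  };
}

// The same lookup, written once per representation.
const isMineObject = (board, r, c) => board[r][c].isMine;
const isMineArray = (state, r, c) => state.board[r][c] === -1;
const isMineSet = (state, r, c) => state.mines.has(`${r},${c}`);

console.log(
  isMineObject(makeObjectBoard(), 0, 1),
  isMineArray(makeArrayBoard(), 0, 1),
  isMineSet(makeSetBoard(), 0, 1)
);
```

The trade-off is mostly about where state lives: objects keep everything for a cell together, parallel arrays keep each property together, and sets store only the cells that actually have a property.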

Mine Placement Algorithms

Array Shuffling (v2, v4)

Fisher-Yates shuffle of all positions

Select first N positions for mines
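As an illustration of the shuffling approach, here is a minimal sketch: list every position, run a Fisher-Yates shuffle, and take the first N entries as mines. The function names and the injectable `random` parameter are assumptions made for this example and are not taken from v2 or v4.

```javascript
// Fisher-Yates shuffle, in place. `random` defaults to Math.random and is
// injectable only so the function can be tested deterministically.
function shuffle(items, random = Math.random) {
  for (let i = items.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1)); // 0 <= j <= i
    [items[i], items[j]] = [items[j], items[i]];
  }
  return items;
}

// Build all [row, col] positions, shuffle them, keep the first `mineCount`.
function placeMinesByShuffle(rows, cols, mineCount, random = Math.random) {
  const positions = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) positions.push([r, c]);
  }
  return shuffle(positions, random).slice(0, mineCount);
}

const placed = placeMinesByShuffle(9, 9, 10);
console.log(placed.length, "mines placed");
```

This variant never needs to retry, because every position appears exactly once in the shuffled list.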

Random Selection with Exclusion (v1, v3, v5, v6, v8, v9, v10)

Generate random coordinates

Exclude first-click area

Retry on duplicates

Safe Zone Approach (v6)

Excludes 3×3 area around first click

More sophisticated safety guarantee
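The random-selection and safe-zone ideas can be combined in one short sketch: draw random coordinates, skip anything inside the 3×3 area around the first click, and let a Set swallow duplicates so the loop simply tries again. This is an assumed illustration, not the code of any of the ten versions; the function name, parameters and the `"row,col"` keys are made up.

```javascript
// Place mines by random selection, excluding the 3x3 area around the first click.
// All names here are hypothetical.
function placeMinesWithSafeZone(rows, cols, mineCount, firstRow, firstCol, random = Math.random) {
  const inSafeZone = (r, c) =>
    Math.abs(r - firstRow) <= 1 && Math.abs(c - firstCol) <= 1;

  // Count the cells that may hold a mine, so the retry loop cannot run forever.
  let available = 0;
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) if (!inSafeZone(r, c)) available++;
  }
  if (mineCount > available) {
    throw new RangeError(`cannot place ${mineCount} mines in ${available} cells`);
  }

  const mines = new Set();
  while (mines.size < mineCount) {
    const r = Math.floor(random() * rows);
    const c = Math.floor(random() * cols);
    if (inSafeZone(r, c)) continue; // exclude the first-click area
    mines.add(`${r},${c}`);         // a duplicate leaves the size unchanged, so we retry
  }
  return mines;
}

const safeMines = placeMinesWithSafeZone(9, 9, 10, 4, 4);
console.log(safeMines.size, "mines, none next to the first click");
```

Compared with shuffling, this approach does less work on sparse boards but slows down as the board fills up, because more draws hit an occupied or excluded cell.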

Feature Comparison

Core Functionality

Advanced Features

UI Control Variations

Button-Based Difficulty (v1, v2, v4, v6, v8, v9)

Individual buttons for each difficulty

Visual active state indication

Immediate difficulty switching

Dropdown Selection (v3, v5, v10)

Space-efficient selection

Shows all options with descriptions

Traditional form control

Mixed Controls (v7)

No JavaScript implementation

HTML-only structure

Performance and Optimization

Rendering Approaches

Full Canvas Redraw: Most implementations clear and redraw entire grid

Individual Cell Rendering: All use cell-by-cell drawing approach

DPR Handling: v1, v3 handle device pixel ratio for crisp rendering
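For readers unfamiliar with device pixel ratio (DPR) handling, the usual idea is to give the canvas more backing pixels than CSS pixels and then scale the drawing context, so drawing code can keep using CSS coordinates. The sketch below assumes that pattern; it is not the code from v1 or v3, and `setupHiDpiCanvas` is a made-up name.

```javascript
// Size a canvas so that one CSS pixel maps to `dpr` device pixels.
// `canvas` only needs width, height, style and getContext("2d"),
// which a browser <canvas> element provides.
function setupHiDpiCanvas(canvas, cssWidth, cssHeight, dpr = 1) {
  canvas.width = Math.round(cssWidth * dpr);   // backing-store size in device pixels
  canvas.height = Math.round(cssHeight * dpr);
  canvas.style.width = `${cssWidth}px`;        // displayed size in CSS pixels
  canvas.style.height = `${cssHeight}px`;
  const ctx = canvas.getContext("2d");
  ctx.setTransform(dpr, 0, 0, dpr, 0, 0);      // draw in CSS pixel coordinates
  return ctx;
}

// Possible browser usage (assumed markup with a single <canvas> element):
//   const canvas = document.querySelector("canvas");
//   const ctx = setupHiDpiCanvas(canvas, 270, 270, window.devicePixelRatio || 1);
```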

Memory Efficiency

Set-based implementations (v6): More memory efficient for large boards

Object-based implementations: Higher memory usage but cleaner code

Array-based implementations: Good balance of memory and performance

Event Handling

Optimized coordinate calculation: All implementations use getBoundingClientRect()

Touch event handling: v1, v3, v4, v9 implement duration-based touch gestures

Context menu prevention: Properly handled across implementations
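The coordinate calculation mentioned above can be sketched as a small pure function: subtract the canvas position reported by `getBoundingClientRect()` from the pointer position and scale the result to the grid. This is an assumed illustration rather than code from the implementations; `cellFromPoint` and its parameters are hypothetical names.

```javascript
// Convert a pointer position (clientX/clientY) into a grid cell.
// `rect` has the shape returned by canvas.getBoundingClientRect():
// { left, top, width, height }. Returns null for points outside the grid.
function cellFromPoint(clientX, clientY, rect, rows, cols) {
  const col = Math.floor(((clientX - rect.left) / rect.width) * cols);
  const row = Math.floor(((clientY - rect.top) / rect.height) * rows);
  if (row < 0 || row >= rows || col < 0 || col >= cols) return null;
  return { row, col };
}

// Possible browser wiring (assumed; `canvas` is a <canvas> element):
//   canvas.addEventListener("click", (e) => {
//     const cell = cellFromPoint(e.clientX, e.clientY, canvas.getBoundingClientRect(), 9, 9);
//     if (cell) console.log("reveal", cell.row, cell.col);
//   });
//   canvas.addEventListener("contextmenu", (e) => e.preventDefault()); // right-click flags instead

const exampleRect = { left: 10, top: 20, width: 270, height: 270 };
console.log(cellFromPoint(45, 85, exampleRect, 9, 9));
```

Dividing by the rendered width and height, rather than by a fixed cell size, keeps the mapping correct even when CSS scales the canvas.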

Code Quality Assessment

Best Practices Observed

Consistent naming conventions: camelCase throughout

Proper event delegation: Clean setup and teardown

State management: Clear game state tracking

Boundary checking: Coordinate validation in all implementations

Areas for Improvement

Magic numbers: Hard-coded colors and dimensions

Method length: Some rendering methods are quite long

Code duplication: Similar coordinate calculations repeated

Error handling: Limited error handling in most implementations

Complexity Rankings

Most Complex: v1 (426 lines) - Comprehensive features

Medium Complex: v2, v4, v6, v8, v9 (350-420 lines)

Simplest: v5, v10 (350-360 lines) - Clean minimal implementations

Unique Implementation Highlights

Version 1

Advanced touch handling with duration detection

Comprehensive smiley face feedback system

Device pixel ratio support for crisp rendering

Version 2

Clean modern gradient design

Separate grid rendering optimization

Built-in game instructions

Version 3

Keyboard shortcut support (F2 for new game)

Dropdown difficulty selection

Classic Windows aesthetic

Version 4

Bomb emoji in title (💣 Minesweeper)

Array-based mine storage approach

Modern card-style layout

Version 5

Cleanest minimal implementation

Dropdown with detailed descriptions

Efficient game logic

Version 6

Set-based data structures for memory efficiency

Game controller emoji (🎮 Minesweeper)

Modern flat design approach

Version 7

HTML-only implementation (no JavaScript)

Pure visual mockup

Classic Windows styling

Version 8

Button-based difficulty selection

Clean white container design

Comprehensive win/lose feedback

Version 9

Emoji-rich interface

Comprehensive smiley feedback system

Built-in instruction text

Version 10

Face button with emoji feedback

Simple dropdown selection

Minimal clean implementation

Recommendations

For Learning Purposes

Start with: v5 or v10 (cleanest implementations)

Study advanced features: v1 or v9 (comprehensive functionality)

Understand patterns: v6 (Set-based approach)

For Production Use

Best overall: v1 (features + touch support + polish)

Modern design: v2 or v4 (gradient styling + clean UI)

Lightweight: v5 (minimal but complete)

For Mobile Applications

Best touch support: v1, v3, v4, v9

Responsive design: Most handle mobile layouts well

Touch gesture handling: Duration-based flag/reveal distinction
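A duration-based distinction between flagging and revealing could look roughly like the sketch below: remember when the touch started, and on release treat a long press as a flag and a short tap as a reveal. The 500 ms threshold, the function names and the injectable clock are assumptions for this illustration; the actual implementations may use different values and structure.

```javascript
const LONG_PRESS_MS = 500; // assumed threshold, not taken from the implementations

// Decide what a touch means based on how long it lasted.
function classifyTouch(startMs, endMs, thresholdMs = LONG_PRESS_MS) {
  return endMs - startMs >= thresholdMs ? "flag" : "reveal";
}

// Small tracker that pairs a touch start with its end.
// `now` is injectable so the logic can be tested without real timers.
function createTouchTracker(onAction, now = Date.now) {
  let startedAt = null;
  return {
    start() {
      startedAt = now();
    },
    end(row, col) {
      if (startedAt === null) return; // end without a matching start
      onAction(classifyTouch(startedAt, now()), row, col);
      startedAt = null;
    },
  };
}

// Possible browser wiring (assumed; cell lookup omitted):
//   const tracker = createTouchTracker((action, row, col) => console.log(action, row, col));
//   canvas.addEventListener("touchstart", () => tracker.start());
//   canvas.addEventListener("touchend", () => tracker.end(row, col));
```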

Conclusion

The 10 implementations demonstrate various approaches to building a Minesweeper game, from minimal functional versions to feature-rich applications with modern UI design. The implementations showcase different architectural patterns, data structures, and user experience approaches while maintaining the core gameplay mechanics.

Key takeaways:

Canvas rendering is consistently used across all implementations

ES6 classes provide good code organization

Touch support significantly enhances mobile usability

Visual design ranges from classic Windows to modern gradients

Code complexity varies from 350-450 lines for full implementations

Feature completeness varies significantly between versions

Each implementation offers unique insights into game development patterns and trade-offs between features, performance, and code maintainability.