The Meta-Token Mental Model For Vibe Coding

Why AI generates code in your code base the way it does

Over the last few weeks I have been working hard on Marvai. Marvai is a tool to find, download and install prompts for developer use. Think NPM for prompts.

All of the code was written by Claude Code. And while this worked great for some time, when I wanted to add a feature that depended on the correct flow of data through some goroutines and channels, it didn’t work.

All the analyzing and Claude’s chanting of “Now I know what the problem is! It’s clear now!” didn’t help. It was stuck in a loop. Because the code was so tangled inside a single 10,000-line file, the AI was no longer able to add code (also see context engineering).

I refactored the code, got the feature working, added more methods, added more tests, added more files, and it worked again.

From that point on, Claude no longer put all the code into one file, but created new files as needed. A lightbulb went off in my head.

Claude created new code that fit the existing code (there was no CLAUDE.md or system prompt guiding it). Because it started with one file, it kept adding to that file. And because it started with average code, the code deteriorated faster than expected.

LLMs aren’t very difficult to understand on a high level. Simplified, they are token-predicting machines. You might have seen ‘tokens’ when coding, like in tokens/second, input tokens, output tokens or $/million tokens for API costs.

Tokens are the oil that makes the AI machine run.

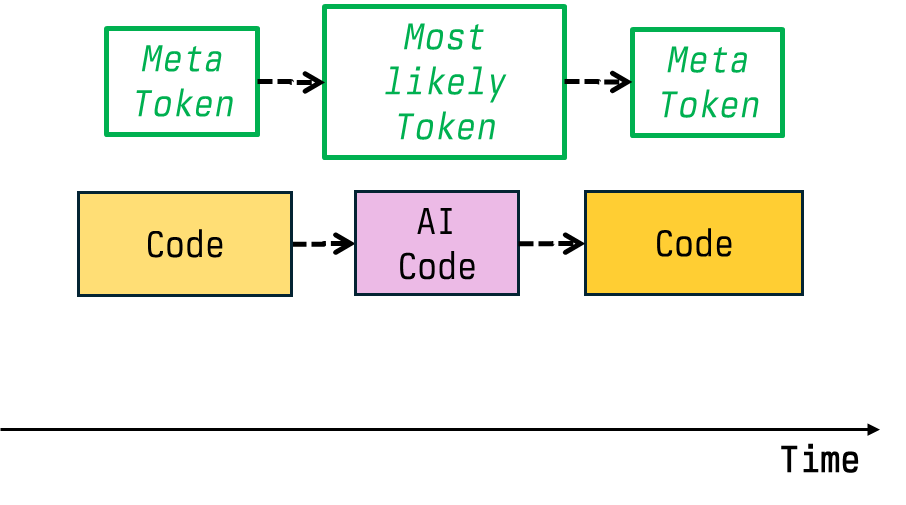

Simplified, AIs (more precisely, the sub-sub-category called LLMs) work by predicting the next token (their output) for a chain of tokens (your input). They predict the most probable next token based on the tokens that came before (how strictly they stick to the most probable one depends on parameters like “temperature”).
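To make the next-token idea concrete, here is a minimal sketch of a temperature-scaled softmax. The token names and scores in `toy_logits` are made up for illustration, and a real LLM scores tens of thousands of tokens with a neural network, so treat this as a picture of the principle rather than how any particular model is implemented.

```python
import math


def next_token_probs(logits, temperature=1.0):
    """Turn raw scores into probabilities with a temperature-scaled softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def most_probable(logits, temperature=1.0):
    """Pick the single most probable token (greedy choice)."""
    probs = next_token_probs(logits, temperature)
    return max(probs, key=probs.get)


# Hypothetical scores for the token that follows some piece of input.
toy_logits = {"fmt": 2.0, "return": 1.0, "panic": 0.1}

for t in (0.2, 1.0, 2.0):
    print(t, next_token_probs(toy_logits, t))
```

A low temperature concentrates almost all probability on the top-scoring token, while a higher temperature spreads it out, which is why the same input can produce slightly different output from run to run.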

You can imagine generative AI for source code as a transition from one code base to the next code base.

If you look at your code base that way, you can view it as one meta-token. A feature the LLM generates - the code it generates - is then the next meta-token.

I call this the Meta-Token Mental Model of Generative AI vibe coding (what a mouthful).

The code the AI generates is the most probable next token, based on your existing code base.

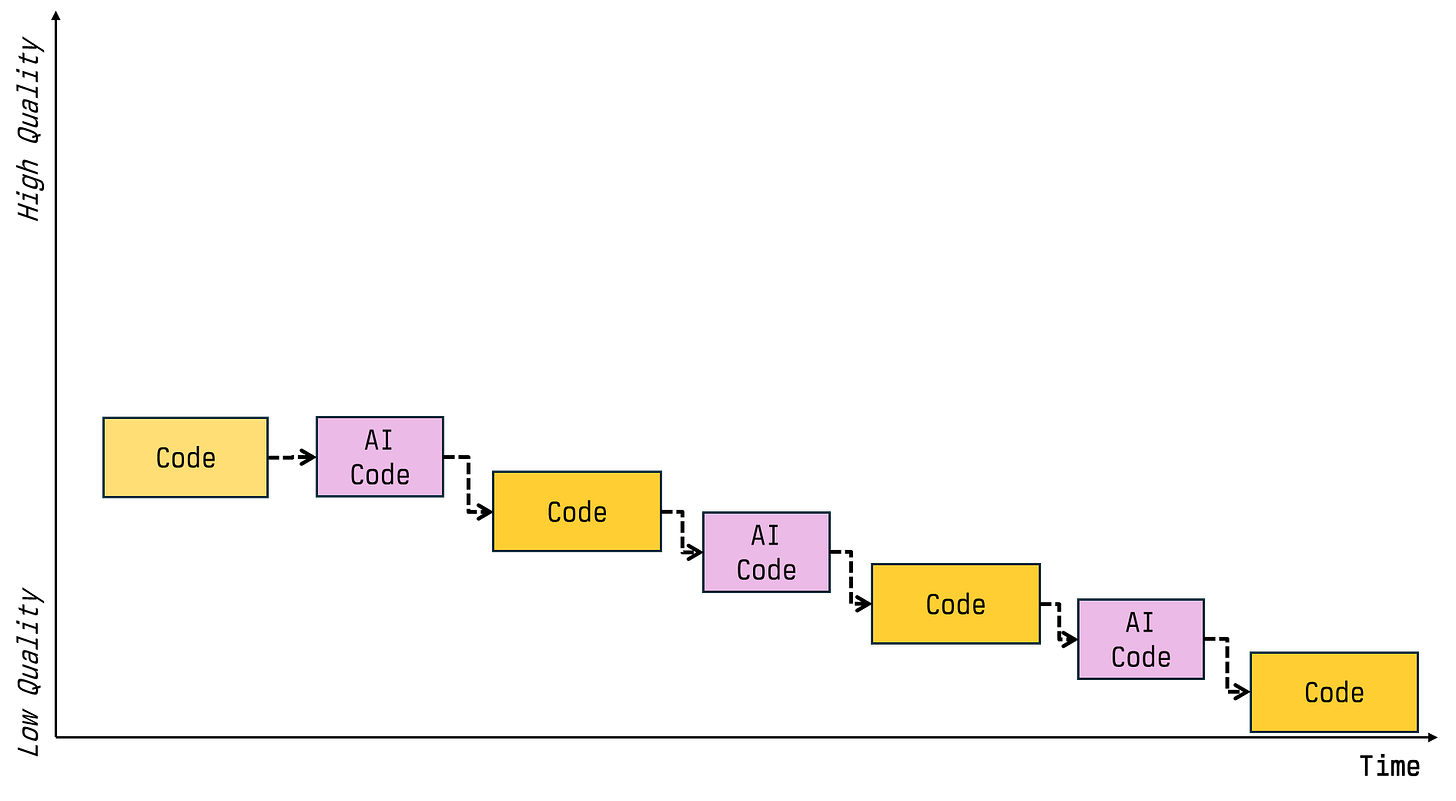

If your code base is bad, the AI will produce code that is the most probable continuation of your code. It does follow the average of what it has learned, but it will primarily produce code that fits your code: “the most likely next token”.
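The mental model can be played through with a toy example. Everything here is an assumption for illustration: the two possible "next steps", the 50/50 `LEARNED_AVERAGE` prior, and the simple counting rule. An LLM does not literally count patterns like this; the sketch only shows how existing code can outweigh the learned average.

```python
from collections import Counter

# Assumed prior: what the model has "learned on average" (made-up numbers).
LEARNED_AVERAGE = {"append_to_existing_file": 0.5, "create_new_file": 0.5}


def next_step_probs(code_base_history, prior=LEARNED_AVERAGE):
    """Weight the learned average by how often each pattern already occurs."""
    seen = Counter(code_base_history)
    weights = {step: p * (1 + seen[step]) for step, p in prior.items()}
    total = sum(weights.values())
    return {step: w / total for step, w in weights.items()}


def most_probable_next_step(code_base_history):
    """Return the step that best fits the existing code base."""
    probs = next_step_probs(code_base_history)
    return max(probs, key=probs.get)


# A code base that grew inside one file vs. one that was refactored.
one_big_file = ["append_to_existing_file"] * 20
refactored = ["append_to_existing_file"] * 5 + ["create_new_file"] * 15

print(most_probable_next_step(one_big_file))
print(most_probable_next_step(refactored))
print(next_step_probs([]))  # empty history: falls back to the learned average
```

In this toy, the code base that lives in one big file makes appending to that file the most probable step, the refactored one makes creating a new file the most probable step, and an empty history simply returns the prior.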

Code gets worse over time anyway, and adding bad code to bad code makes it worse even faster, so the code base deteriorates.

It deteriorates to a point where the AI is not able to add more code without creating bugs - just like humans.

In contrast to working with human developers, you will most likely get stuck in a loop with the AI. The AI produces bad code, then rolls it back, and then adds the same code again, as it is the most probable next token. It is a little bit different because of your feedback, but essentially the same. You’re stuck in a loop.

What can you do?

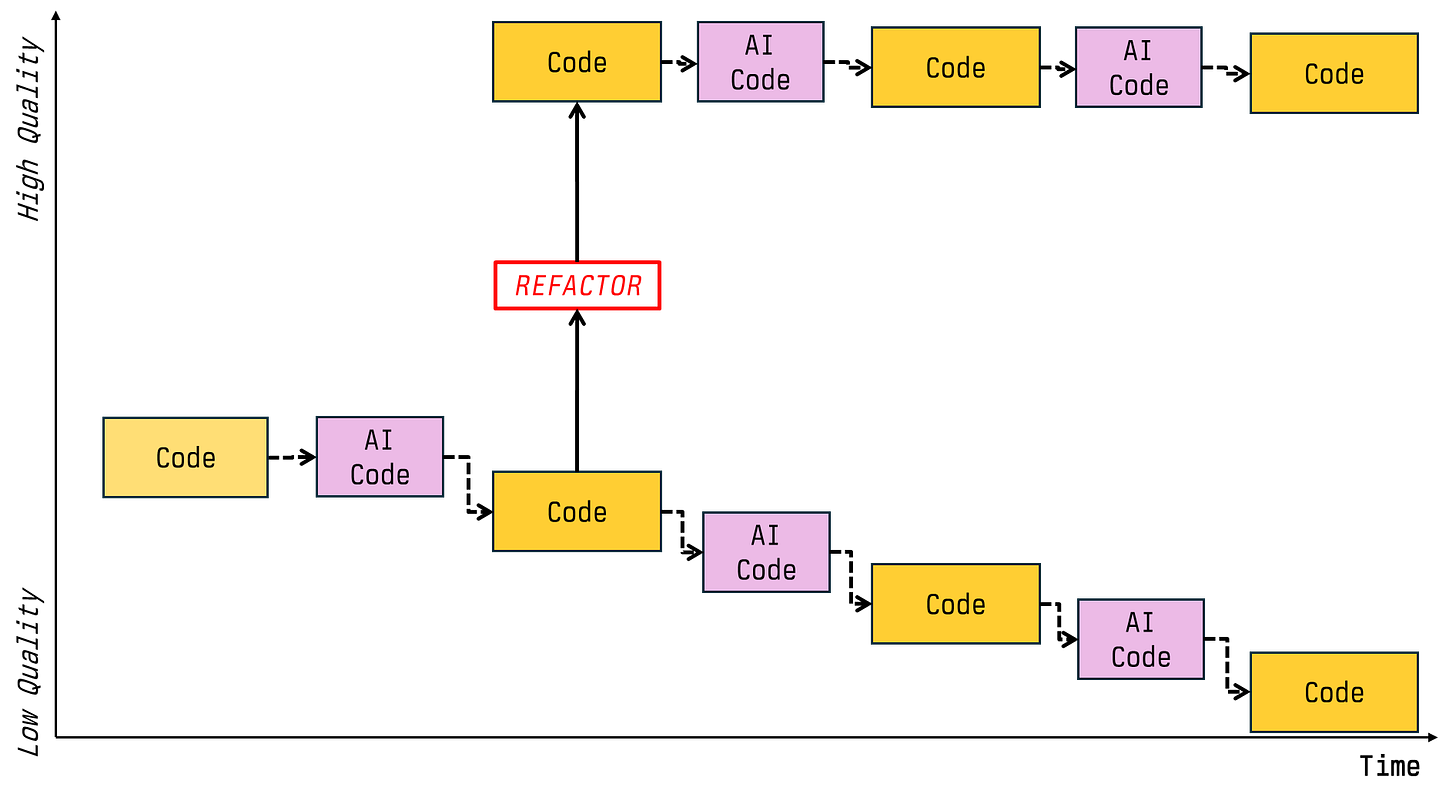

Show the AI what you want!

Let the AI generate code.

Refactor in a way that makes the code look like it should: more files, smaller methods, error handling, exception handling, security, using frameworks instead of writing its own code (NIH syndrome), ...

Let the AI resume generating code.

The new code will fit your refactored code.

If it starts fresh with a blank slate, it does not know which direction to go in, so it will tend toward the average.

It’s like chaos theory - on a blank page too many things are possible, and small changes in the beginning lead to large variations over time.

If you lift the quality level, it will produce the code that is most likely the next step for your code base. It does not have an inherent desire to create great code. It creates code that fits yours!