Everybody is looking back at 2025, and I’m doing the same through the lens of AI. A lot has changed in AI. At the beginning of the year I still used Cursor to generate code for my projects, telling Cursor what code to write and then inspecting it. Today I work only through Claude Code and with Vibe Engineering.

Against that backdrop of AI progress, three moments and insights were particularly powerful for me:

Code as Meta-Token

Coder vs. Creator

Model vs. Agent

Code as Meta-Token

Many people - including me in the past - have a wrong view of how AI operates when writing code. They believe the AI is like a developer: trained on code examples and then, depending on that training, making good or bad decisions about what code to write. They imagine the AI analyzing the existing code, thinking about the requirements, and then finding the best solution and the code to implement it. In this view, the quality of the code depends on the training of the AI: good source material leads to a well-trained AI, which creates good code.

This is wrong.

Training an AI is very different from training a developer. The AI takes the input and generates the most probable continuation of that input. That input is not only the prompt with the requirements, but also the existing code. If your project is one long source code file, the AI is happy to add to that long file. If you don’t use classes, the AI is happy to not use classes either. The AI does not take the requirements, think about the best solution based on the best code it has seen, and then try to apply it to your code. The AI evolves your code in the most probable way. If the generated code is bad, this says more about your code base (or the code in the context window) than about the training data.

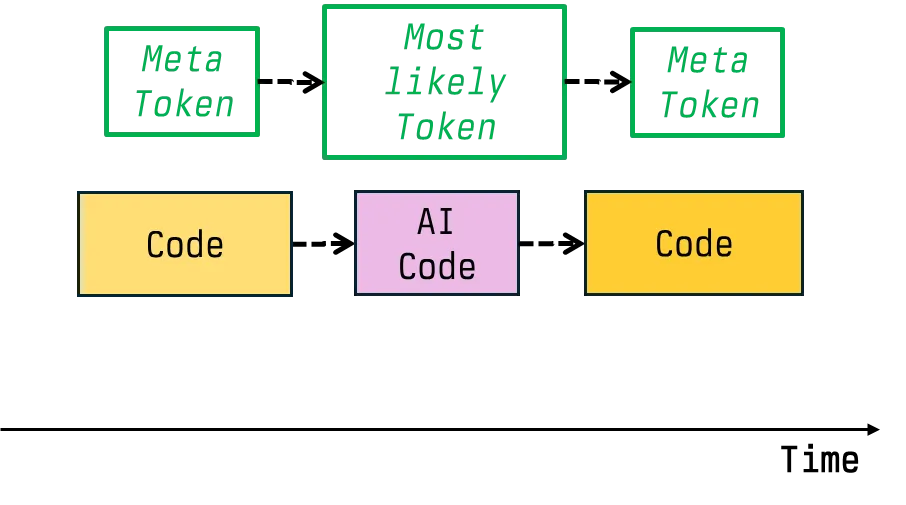

For this it is helpful to think of your whole existing code base as a META TOKEN, with the AI generating the next token (the new code) based on the current one. Once I had understood that and started refactoring the code by hand from time to time, the code the AI generated was much better. The AI quickly picks up the style of your code, from logging to error handling and structure.
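To make the idea concrete, here is a deliberately tiny toy, not how a real LLM works internally. It assumes a "model" that knows nothing except bigram counts taken from the context it is given. The function names and the two sample code bases are made up for illustration. The point is only that the same predictor continues each code base in that code base's own style.

```python
from collections import Counter, defaultdict


def build_bigram_counts(tokens):
    """Count which token follows which token in the given context."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def most_probable_next(context, last_token):
    """Return the token that most often follows last_token in the context."""
    counts = build_bigram_counts(context.split())
    followers = counts.get(last_token)
    if not followers:
        return None  # the context never contained last_token
    return followers.most_common(1)[0][0]


# Two hypothetical code bases that differ only in how they handle errors.
codebase_with_logger = (
    "try: run() except Exception: log.error(e) "
    "try: load() except Exception: log.error(e)"
)
codebase_with_print = (
    "try: run() except Exception: print(e) "
    "try: load() except Exception: print(e)"
)

# Same "model", same question, different context, different continuation.
print(most_probable_next(codebase_with_logger, "Exception:"))  # log.error(e)
print(most_probable_next(codebase_with_print, "Exception:"))   # print(e)
```

A real model conditions on far more than the previous token, but the direction is the same: what is already in the context strongly shapes what comes next.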

Coder vs. Creator

The second insight from 2025 is that there are coders and creators. For 40 years I thought of myself as a coder. I was in it for writing code. I thought of developers as people who want to write code.

In 2025 I found out that you can split developers into coders and creators. I learned about myself that I was not a coder, but a creator. In 1981 - as a kid - I got into coding because I wanted to play the video game ideas in my head. Creators want to create things, and coding is the tool to do so. Creators want to create apps and games.

Coders, on the other hand, love the coding; they are in it for the coding, not the creating. They love the tools and identify with programming languages. I have used more than 20 programming languages over the decades, and I currently like Go a lot, but I’m not tied to any of those languages, nor do I identify with them. A coder would. A coder sees themselves as a Python, a Rust, or a Haskell person. A coder cares mostly about the tool and the intricate beauty of the code, not the impact. Something I’ve learned about myself: I don’t care about mechanical watches, my quartz Casio is fine - I care about the time more than the inner workings of my watch. I do love coding. I love it when it clicks, when something complicated works, the brain teasing, the inner beauty of code - a function design that is beautiful - it’s just that creating is more important to me.

Creators will be fine with AI - they will flourish because AI is a superpower for creating. Coders who identify as such will have a hard time when code is no longer written by hand.

Model vs. Agent

My third Eureka moment was understanding the contribution of the agent. People mostly talk about the LLM, such as Opus 4.5 vs. Gemini 3 Pro.

What I’ve learned in 2025 is how important the agent is. Half of what makes an AI good at coding is the agent; the other half is the LLM. Most models already produce good code, but how tools are used, whether the model overengineers, and many other things are tied to the agent: its system prompts, its prompt wrapping, and the way it uses tooling.
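A minimal sketch of that split, under loud assumptions: `stub_model` is a hypothetical stand-in for an LLM, and `Agent` is a made-up wrapper, not the design of Claude Code, Gemini CLI, or any real tool. It only shows that the same model can behave very differently depending on the system prompt and prompt wrapping the agent puts around a request.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]  # prompt in, text out


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: it follows the instruction in its prompt."""
    if "plan before coding" in prompt.lower():
        return "PLAN: 1. read existing code 2. propose change 3. wait for approval"
    return "CODE: def new_feature(): ..."


@dataclass
class Agent:
    """Made-up agent: wraps every user request before the model sees it."""
    model: Model
    system_prompt: str

    def run(self, request: str) -> str:
        # Prompt wrapping: the model never sees the bare request.
        prompt = f"{self.system_prompt}\n\nUser request: {request}"
        return self.model(prompt)


# Same model, two agents that differ only in their system prompt.
eager_agent = Agent(stub_model, "You are a coding assistant.")
planning_agent = Agent(stub_model, "You are a coding assistant. Plan before coding.")

print(eager_agent.run("Add CSV export"))
print(planning_agent.run("Add CSV export"))
```

A real agent also decides which tools the model may call and how their results are fed back, which this sketch leaves out.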

I’ve learned this by using Gemini in the terminal. While the model beats Anthropic models on (some) benchmarks, it’s way worse when actually working with it. It wants to code all the time; there is no planning mode or discussion in Gemini CLI.

Claude Code is currently the best way to do Vibe Engineering because the model is great and the CLI agent is great too.

Those were my three Eureka AI moments of 2025 that changed my thinking.

I wonder what the moments of 2026 will be.